Blog

Access industry insights that will take your organization—and your people—to the next level.

Featured resources:

K-12 Vaping Prevention: Strategies to Change Attitudes and Behavior

Aug 16, 2023 min read

New OSHA Recordkeeping Requirements in 2024

Aug 10, 2023 7 min read

Meet NFPA Standards with Vector Solutions

Aug 03, 2023 min read

10 Daily Workplace Safety Tips in Manufacturing

Apr 24, 2023 7 min read

Latest resources

Helping Your School Comply with Professional Development Policies for Trauma-Informed Practices

April 23, 2024 4 min read

The Human Side of the Highway: Supporting Truck Driver Mental Health

April 22, 2024 5 min read

Mental Health Awareness Month 2024

April 22, 2024 4 min read

Workplace Violence Training to Stay Compliant

April 19, 2024 9 min read

Vector Expert Certification 3-Part Series: Unlock the Power of Your Digital Prevention Programs

April 17, 2024 2 min read

New Law Enforcement Online Training: Ethical Decision Making and Interacting with Special Populations

April 16, 2024 4 min read

Everything to Know About MSHA Part 48 Training

April 15, 2024 7 min read

Warehouse Training: Courses Proven to Boost Employee Development and Retention

April 15, 2024 6 min read

Top 10 Oil and Gas Training Courses to Include in Training Plans

April 10, 2024 8 min read

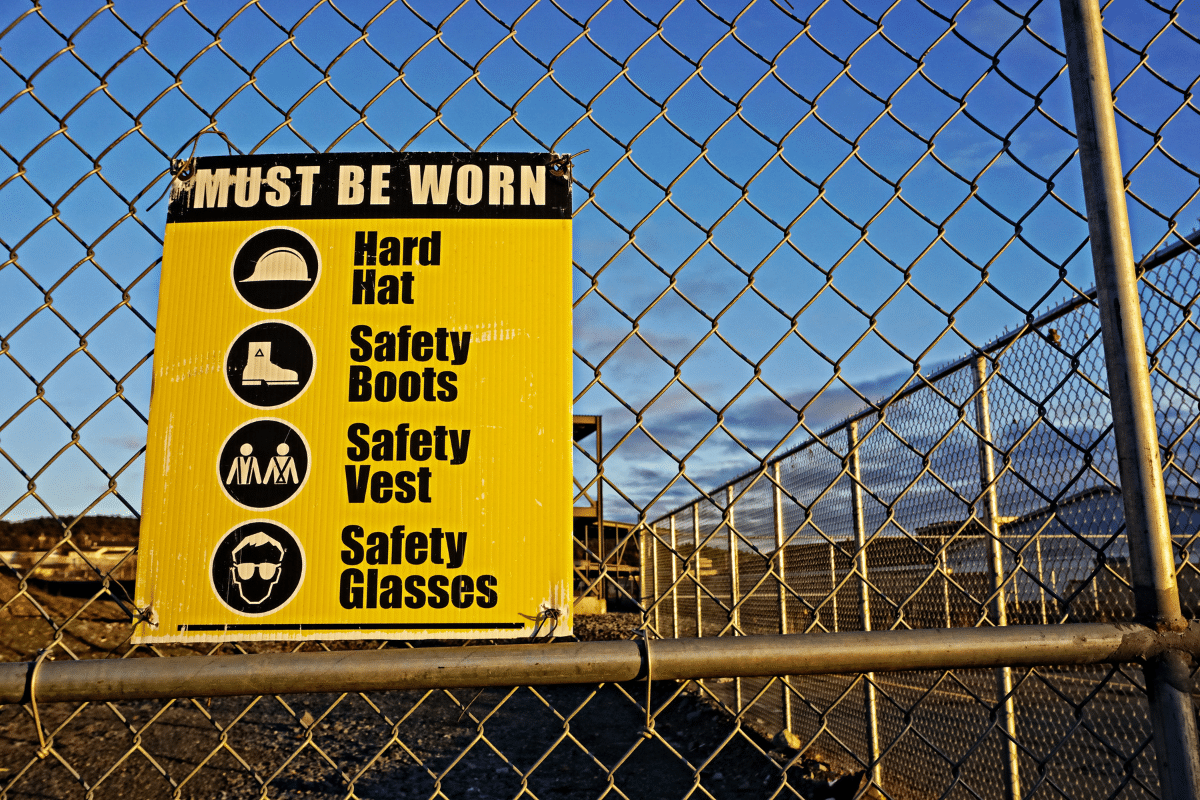

Seven Key Health Hazards in Construction

April 10, 2024 6 min read

Navigating the Future: AI Architecture and Its Impact on Design

April 9, 2024 4 min read

Food Manufacturing Sanitation Training: The Secret Ingredient for Quality and Safety

April 8, 2024 7 min read

TMS vs LMS: Why A Police Training Management System Is The Right Pick

April 8, 2024 7 min read

Choosing the Right Learning Management System (LMS) for Your Organization

April 8, 2024 6 min read

Oil and Gas Safety: Top Hazards and Solutions

April 3, 2024 7 min read
